We will generate zipped backup files to back up the MySQL databases and site files of every website by running a simple Bash script.

To back up the MySQL databases and site files of any website, we need a Bash script that performs all these tasks. In this article, we will show you how to do this step by step. Before proceeding with the instructions here, you need to set up the backup infrastructure explained in the previous article.

1. Create a bash file (backup.sh) for the backup inside the master_backup directory by running the command:

root@mail:~# nano /var/master_backup/backup.sh

2. Then, enter the code below inside the file:

#!/bin/bash

#==================================

# Change the below

#==================================

BACKUP_DIR="/var/master_backup"

EMAIL_ADDRESS="info@vpsprof.com"

# Not used by default: old files are deleted based on the number of files in each folder

KEEP_ALIVE_DAYS=90

# Keeps the 5 newest files (6-1) in each folder

KEEP_ALIVE_COUNT=6

# For the database backup, CHECK THE CNF FILES USER AND PASSWORD BEFORE RUNNING THE BACKUP

ARRAY_DBUSER_DBNAME_backupFOLDER=(

root "--all-databases" all

sitebase_user sitebase_db sitebase

)

# For the site files

ARRAY_SITE_FOLDERS_NEED_BACKUP=(

sitebase

)

# To Backup the vmail

BACKUP_VMAIL=1

#==================================

# Change the above

#==================================

echo ""

echo "Database Backup of: $(date +\%F--\%T)"

# We use printf because it interprets \n as a new line, which plain echo does not

printf "\n"

# Use one timestamp for all files so that mutt can find the file to attach

NOW=$(date +\%F--\%T)

# DATABASE SECTION

#=================

for (( index=0; index<${#ARRAY_DBUSER_DBNAME_backupFOLDER[@]}; index+=3 )); do

db_user=${ARRAY_DBUSER_DBNAME_backupFOLDER[$index]}

mycnf=$BACKUP_DIR/.my.cnf-$db_user

db_name=${ARRAY_DBUSER_DBNAME_backupFOLDER[${index}+1]}

# For Database:

backup_folder=$BACKUP_DIR/databases/${ARRAY_DBUSER_DBNAME_backupFOLDER[${index}+2]}

new_zip_file_name=$backup_folder/${ARRAY_DBUSER_DBNAME_backupFOLDER[${index}+2]}"_db"-$NOW.zip

# Backup the databases

mysqldump --defaults-file=$mycnf -u $db_user $db_name | zip $new_zip_file_name -

# Send email

echo "$NOW" | mutt -s "Meal $db_name" -a "$new_zip_file_name" -- $EMAIL_ADDRESS

# To delete database files older than x days to save space. For minutes, use -mmin.

#find $backup_folder/* -mtime +$KEEP_ALIVE_DAYS -exec rm {} \;

# Below is for minutes

#find $backup_folder/* -mmin +$KEEP_ALIVE_DAYS -exec rm {} \;

# To delete old copies and keep only the newest ones in a folder (-r skips rm when there is nothing to delete)

ls -t $backup_folder/* | tail -n +$KEEP_ALIVE_COUNT | xargs -r rm --

echo ""

echo "Export Details #$(($index/3+1)):"

echo ""

echo "DB_USER: $db_user"

echo ".my.cnf FILE: $mycnf"

echo "DB_NAME: $db_name"

echo "BACKUP_FILE: $new_zip_file_name"

echo ""

done

# SITE FILES SECTION

#===================

for (( index=0; index<${#ARRAY_SITE_FOLDERS_NEED_BACKUP[@]}; index+=1 )); do

backup_folder=$BACKUP_DIR/site_files/${ARRAY_SITE_FOLDERS_NEED_BACKUP[${index}]}

new_zip_file_name=$backup_folder/${ARRAY_SITE_FOLDERS_NEED_BACKUP[${index}]}"_site_files"-$NOW.zip

folder_to_zip=/var/www/html/${ARRAY_SITE_FOLDERS_NEED_BACKUP[${index}]}

zip -r $new_zip_file_name $folder_to_zip

# To delete site files older than x days to save space

#find $backup_folder/* -mtime +$KEEP_ALIVE_DAYS -exec rm {} \;

# To delete old copies and keep only the newest ones in a folder (-r skips rm when there is nothing to delete)

ls -t $backup_folder/* | tail -n +$KEEP_ALIVE_COUNT | xargs -r rm --

echo ""

echo "Zipped the file: $new_zip_file_name"

echo ""

done

# VMAIL FILES SECTION

#====================

if [[ $BACKUP_VMAIL == 1 ]]

then

backup_folder=$BACKUP_DIR/vmail_backup

new_zip_file_name=$backup_folder/"vmail"-$NOW.zip

folder_to_zip=/var/vmail

zip -r $new_zip_file_name $folder_to_zip

# To delete vmail files older than x days to save space

# find $backup_folder/* -mtime +$KEEP_ALIVE_DAYS -exec rm {} \;

# To delete old copies and keep only the newest ones in a folder (-r skips rm when there is nothing to delete)

ls -t $backup_folder/* | tail -n +$KEEP_ALIVE_COUNT | xargs -r rm --

fi

printf "\n\n"

echo "Have a good day!"

printf "\n\n"

Please note that there must be no space before or after the equal sign (=) in the variable assignments. If you add a space, the script will fail with an error.
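As a small illustration of the rule about the equal sign, the sketch below assigns a variable correctly and then runs a deliberately wrong assignment in a child Bash process, so the error does not stop the demo. DEMO_COUNT is a hypothetical name used only for this example.

```shell
#!/bin/bash
# Correct: no spaces around the equal sign.
KEEP_ALIVE_COUNT=6
echo "KEEP_ALIVE_COUNT is $KEEP_ALIVE_COUNT"

# Incorrect: with spaces, Bash tries to run DEMO_COUNT as a command.
# It runs in a child shell here so this demo can continue afterwards.
error_message=$(bash -c 'DEMO_COUNT = 6' 2>&1)
status=$?

echo "Message from Bash: $error_message"
echo "Exit status: $status (127 means the command was not found)"
```

If you see a similar message when running the backup script, check the assignments in the "Change the below" block first.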

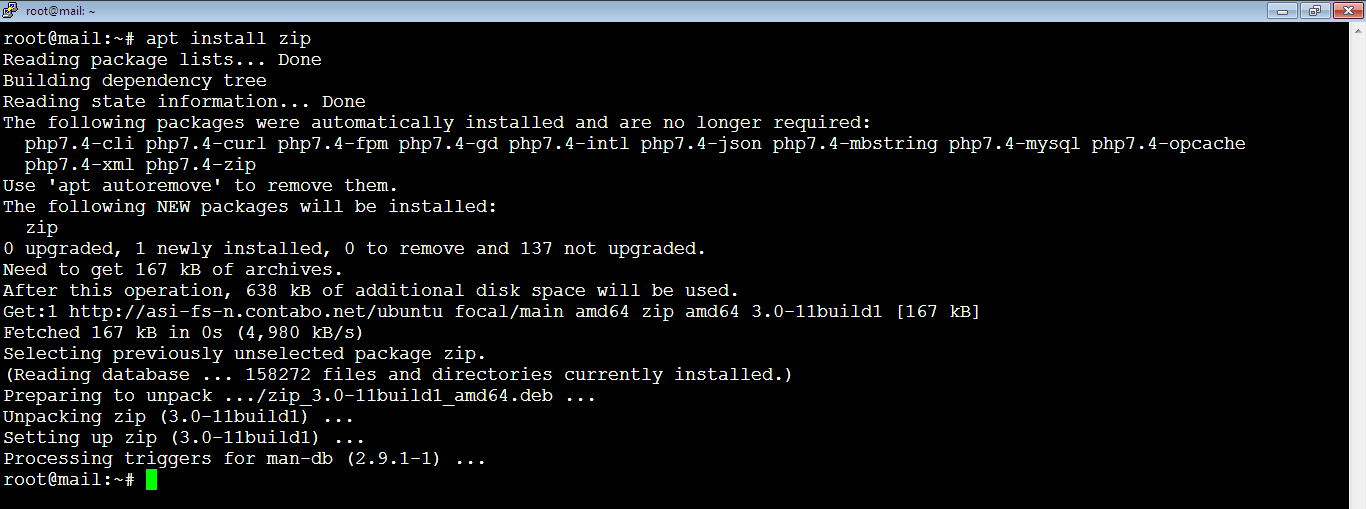
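The database list in the script is a flat array that is read three items at a time: database user, database name, and backup folder. The sketch below shows only that reading pattern, without running any backup. DB_ENTRIES and describe_entries are hypothetical names for illustration; the sample values are the ones used in the script.

```shell
#!/bin/bash
# Each database takes exactly three consecutive items:
# user, database name, backup folder.
DB_ENTRIES=(
  root "--all-databases" all
  sitebase_user sitebase_db sitebase
)

describe_entries() {
  local index
  # Warn early if an item is missing, because every later entry would shift.
  if (( ${#DB_ENTRIES[@]} % 3 != 0 )); then
    echo "The list must contain a multiple of 3 items" >&2
    return 1
  fi
  for (( index=0; index<${#DB_ENTRIES[@]}; index+=3 )); do
    echo "user=${DB_ENTRIES[index]} db=${DB_ENTRIES[index+1]} folder=${DB_ENTRIES[index+2]}"
  done
}

describe_entries
```

When you add another website, add one more line with all three values; leaving one out would shift every entry after it.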

Install ZIP

3. Before we run the backup script above, we need to install the Zip utility by running the command:

root@mail:~# apt install zip

In case you want to go back to the base (home) directory, just run the command:

root@mail:~# cd

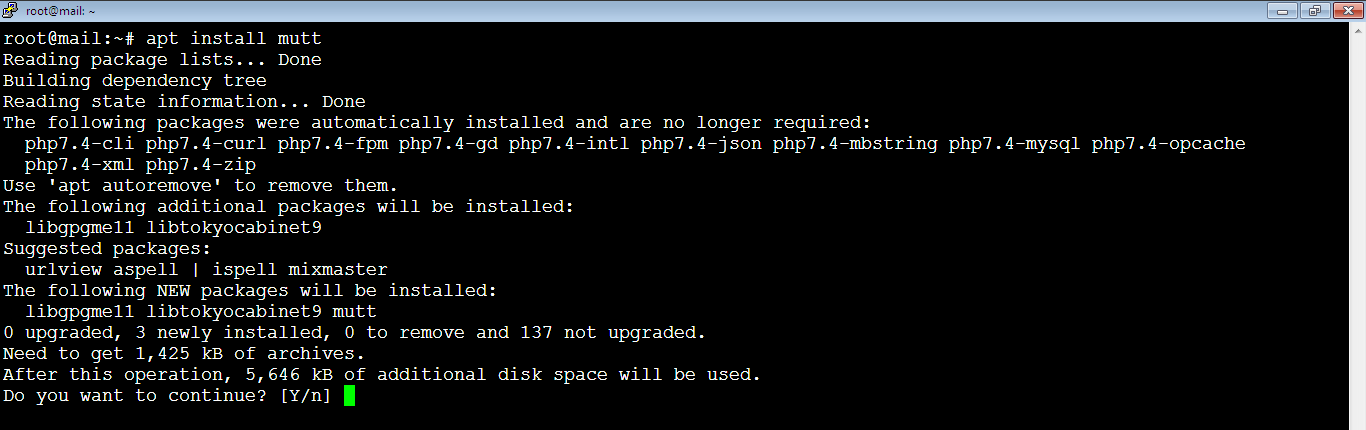

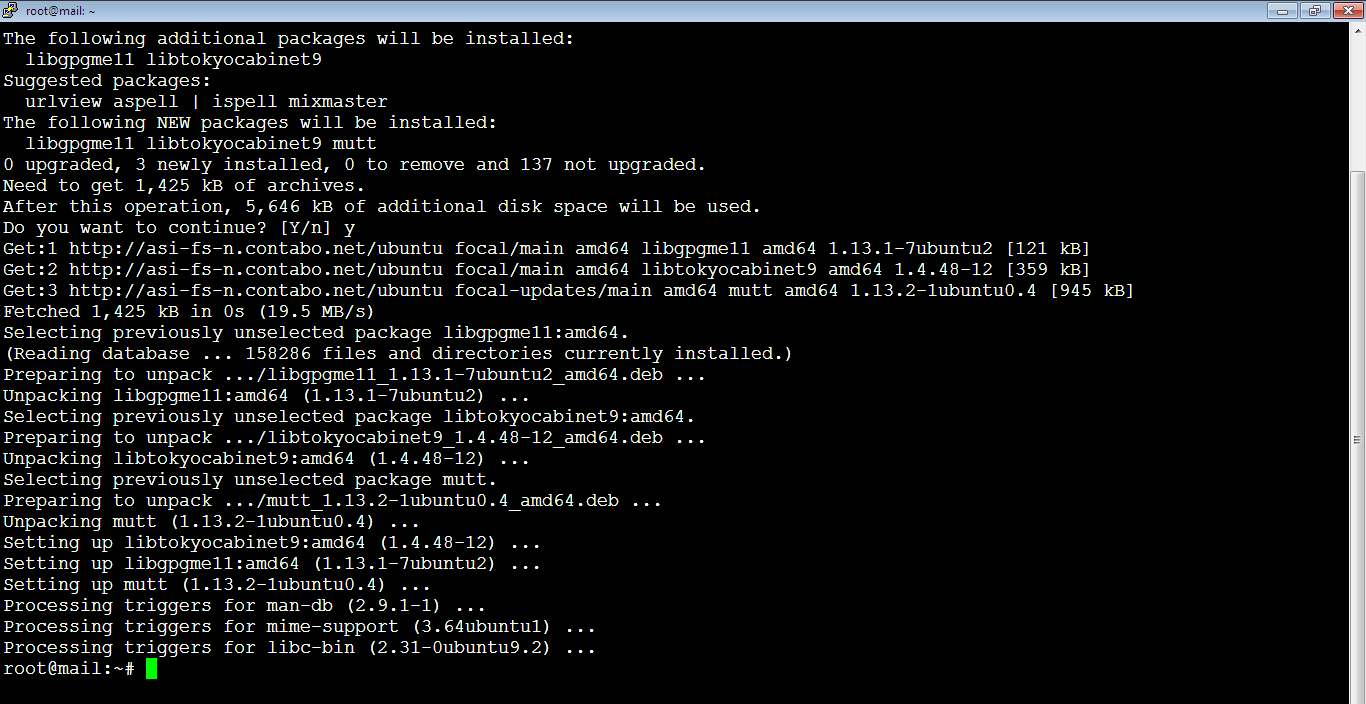

4. We also need to install Mutt, a command-line mail client that lets the script email the database backups. Sending backups by email is practical as long as the website's database has not grown too large. Run the command below:

root@mail:~# apt install mutt

You will be asked to confirm the installation, type: y

And here we go, installation succeeded.
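Before running the backup, it can help to confirm that the tools the script calls (zip, mutt and mysqldump) are available. The following is a minimal sketch, not part of the original script; missing_tools is a hypothetical helper name.

```shell
#!/bin/bash
# Print the name of every listed command that is not found on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

missing=$(missing_tools zip mutt mysqldump)

if [ -n "$missing" ]; then
  # Unquoted on purpose so the names print on one line.
  echo "Missing tools:" $missing
else
  echo "All required tools are installed."
fi
```

If a tool is reported as missing, install it with apt as shown in the steps above before running the backup.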

5. Now it is time to run the backup Bash script. Run the command:

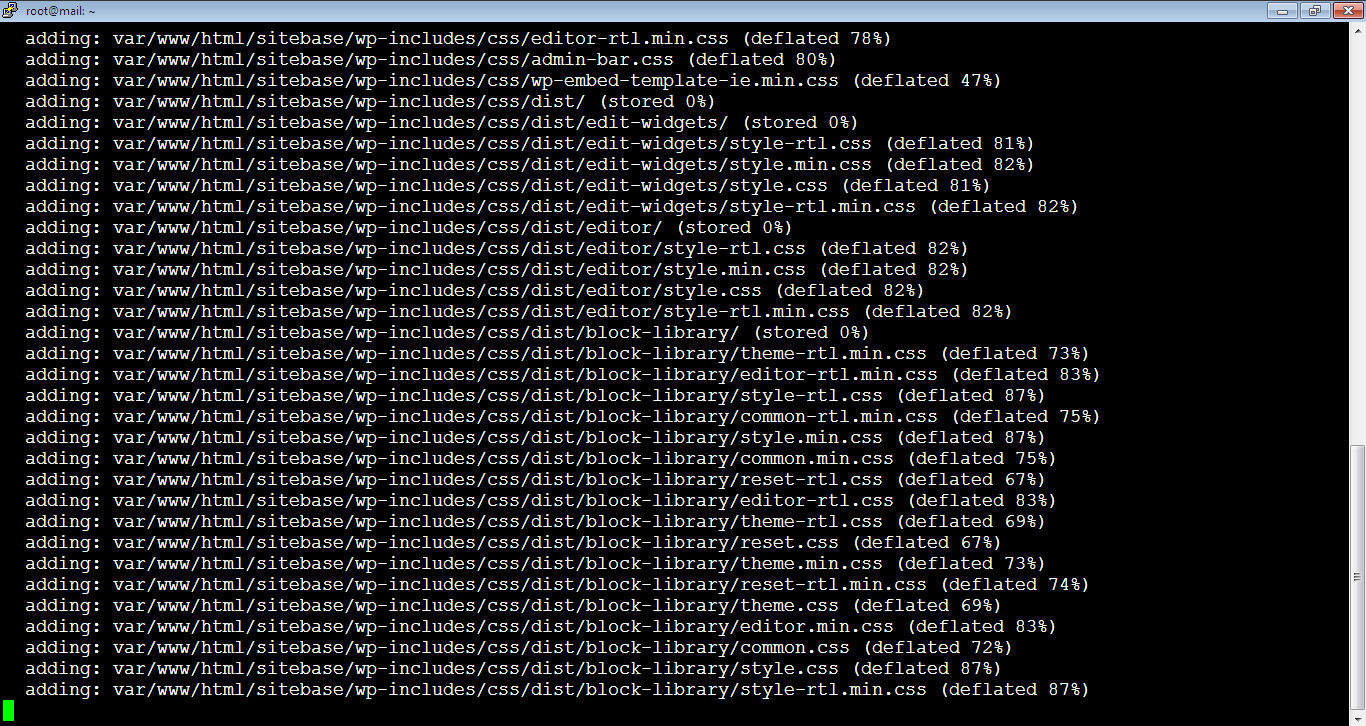

root@mail:~# bash /var/master_backup/backup.sh

Once you run the command above, you will see the progress of the backup as shown below. Linux will start zipping all folders and files:

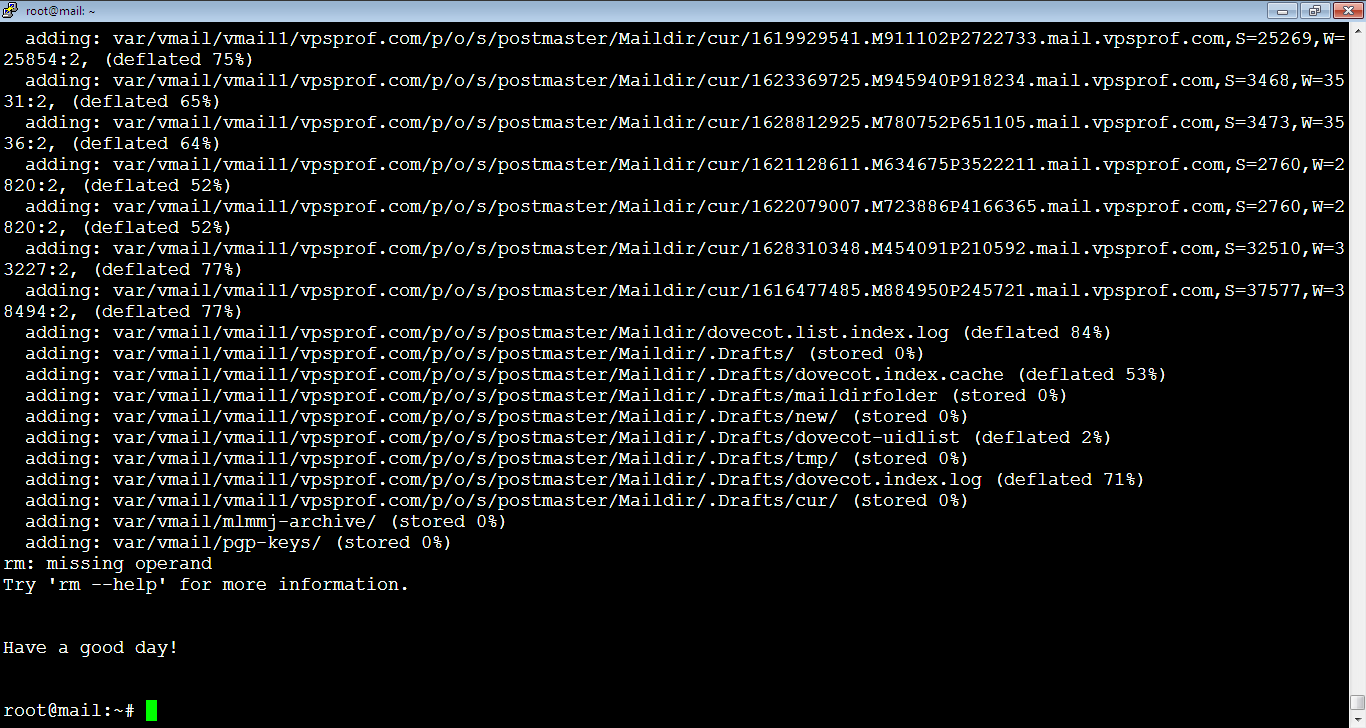

And here is the output when it is done:

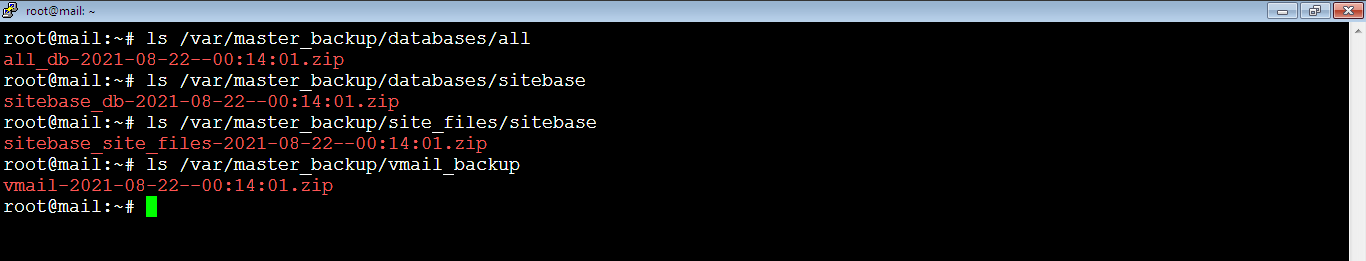

6. To make sure that the script has backed up all the folders we need, run the commands below:

root@mail:~# ls /var/master_backup/databases/all

root@mail:~# ls /var/master_backup/databases/sitebase

root@mail:~# ls /var/master_backup/site_files/sitebase

root@mail:~# ls /var/master_backup/vmail_backup

Here is the result, it worked!

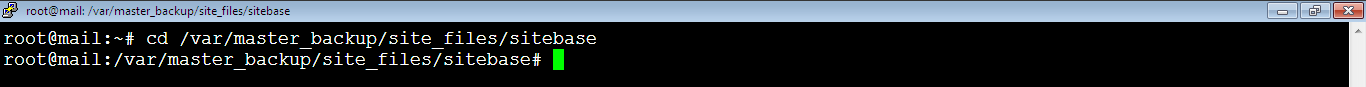

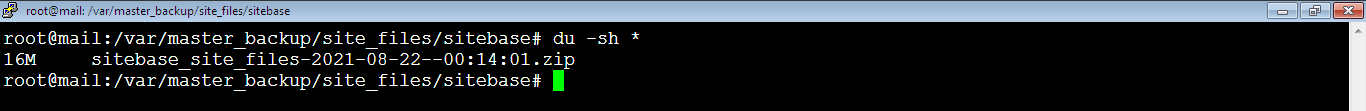
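After several runs, each of these folders should hold at most five archives, because the script removes older copies with an ls, tail and xargs pipeline. The sketch below reproduces that pipeline in a temporary directory with eight empty placeholder files, so you can see which ones survive without touching real backups. The file names and dates are made up for the demo.

```shell
#!/bin/bash
KEEP_ALIVE_COUNT=6
demo_dir=$(mktemp -d)

# Create 8 placeholder archives dated 1 to 8 January 2024 (demo-8 is newest).
for i in 1 2 3 4 5 6 7 8; do
  touch -t "2024010${i}0000" "$demo_dir/demo-$i.zip"
done

# List newest first, skip the first 5 lines, delete everything after them.
ls -t "$demo_dir"/* | tail -n +"$KEEP_ALIVE_COUNT" | xargs rm --

remaining=$(ls "$demo_dir" | wc -l)
oldest_left=$(ls -tr "$demo_dir" | head -n 1)
echo "Archives left: $remaining"
echo "Oldest archive kept: $oldest_left"

rm -r "$demo_dir"
```

With KEEP_ALIVE_COUNT=6, the three oldest placeholders are removed and five remain, which matches the "6-1" comment in the script.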

You can make sure that the zipped folders are not empty by checking the human-readable size of the zip file. First, enter any of the backup directories. We chose the sitebase directory by running the command below:

root@mail:~# cd /var/master_backup/site_files/sitebase

Then, run the below command to list the size of the zipped file inside the sitebase backup directory:

root@mail:/var/master_backup/site_files/sitebase# du -sh *

As you see, the size is 16M.

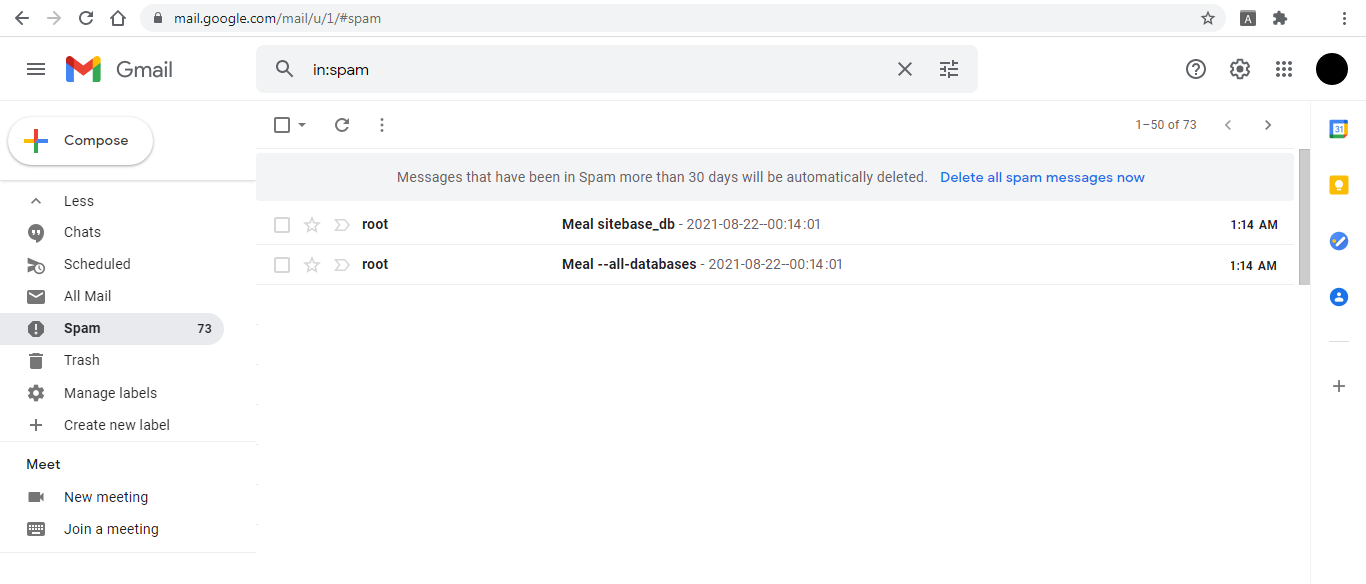
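The same size check can be repeated for all four backup folders at once. The sketch below is one possible way to do it, assuming the folder layout used in this article; newest_archive and check_backup_dir are hypothetical helper names. It only confirms that the newest zip file in each folder exists and is not empty. It does not test whether the archive can be extracted.

```shell
#!/bin/bash
# Print the newest .zip file in a directory, or nothing if there is none.
newest_archive() {
  ls -t "$1"/*.zip 2>/dev/null | head -n 1
}

# Report whether the newest archive in a directory exists and is non-empty.
check_backup_dir() {
  local newest
  newest=$(newest_archive "$1")
  if [ -n "$newest" ] && [ -s "$newest" ]; then
    echo "OK: $newest"
  else
    echo "PROBLEM: no non-empty archive in $1"
    return 1
  fi
}

failed=0
for dir in /var/master_backup/databases/all \
           /var/master_backup/databases/sitebase \
           /var/master_backup/site_files/sitebase \
           /var/master_backup/vmail_backup; do
  check_backup_dir "$dir" || failed=1
done
echo "Finished, failed=$failed"
```

A folder reported as PROBLEM is worth inspecting by hand with ls and du as shown above.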

7. Check your email, including the spam folder, to verify that the database backups were delivered. Delivery sometimes takes up to 5 minutes, so please wait.